Touchless technologies are going through a revival. Sheena Visram tells Johanna Hamilton AMBCS about this new era in inclusive and intuitive touchless computing.

From interacting with computers using hand and facial gestures, to gamifying exercise and physical rehabilitation, to reducing contamination: the research at Great Ormond Street Hospital’s (GOSH) DRIVE Innovation hub offers a new way of communicating for people with restricted physical dexterity, and a way for clinicians to interact with computers without physical contact.

Surprising as it may seem, this touch-free and assistive technology was motivated, in part, by the restrictions on surface contact introduced during the COVID-19 pandemic to reduce the spread of pathogens. Hospitals have several settings that require sterile environments, and essential information is often accessed through communal computers, so it made sense to prototype touchless computing.

How long has touchless computing been around?

Touchless technologies have been in development for more than a decade. Looking back, early work was done by Microsoft on Project Natal, released in 2010 as the Kinect: a depth camera that uses specialised software to track skeletal movement.

Whilst the Kinect was often used in gaming, it was also quite expensive at the time and required assembly of new equipment. A team from Microsoft researched the potential for the Kinect in an operating theatre; this yielded some great feedback and good published papers but never became mainstream.

How was your approach different?

We approached this age-old problem from a different angle. A design principle of ours was to use the tech that we already have and to leave no one behind. MotionInput was built from this.

Firstly, we use a regular webcam, the kind already integrated within existing computers and laptops. There’s no need to install something new or pay for something extra. This was only possible due to advances in machine learning over the past four years.

Secondly, we created a series of modules for different types of gesture inputs. We didn't just replace one or two hand gestures; we developed a suite of gestures, including hand gestures for 3D models, head movements, eye tracking and extremity triggers. We pushed the boundaries to imagine new ways of interacting with computer interfaces.

Thirdly, the project is open-sourced. We believe in this working ethos and look to release early versions of MotionInput in order to receive community feedback and collaboration.

How do you put people at the centre of touchless computing?

As part of early development, and intrinsic to the research at the DRIVE Innovation hub at GOSH, we've worked with clinicians from the very beginning. This has been in partnership with the Clinical Simulation Team, radiology and cardiac surgeons, who were open to trying out new hand gestures and sharing potential use cases based on real-world experiences. This forced us to focus on touchless computing from the perspective of people, rather than from the perspective of what the technology could do.

As a result, we designed MotionInput with a sense of humility, putting people front and centre. This meant that we spent significant time listening and adopted an agile way of developing features.

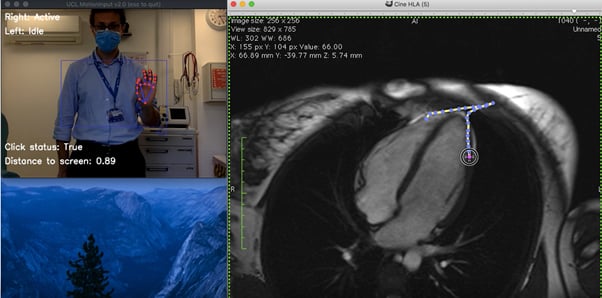

MotionInput v2.0 hand gestures being trialled by Mr Stefano Giuliano for drawing on examples of imaging in the Clinical Simulation Suite at Great Ormond Street Hospital for Children.

Tell us about your work

I’m fortunate enough to be completing a doctorate out of UCL’s Interaction Centre and the DRIVE Innovation hub at GOSH. It's one of the few spaces I know of that exists within a hospital to introduce and try out new technologies, have conversations with policy-makers, and involve children, young people and frontline staff.

Most people have no idea how complex the workforce is, or how much cross-sector involvement is required to deliver high quality care to patients in quite an overstretched system, or how hard it is to innovate within that space. And so, I research technology adoption strategies, grounded in my own experience and in the voice and perspective of people working in healthcare.

I have always been interested in rapid prototyping, and so I spend a lot of time translating new concepts into prototypes that can be used to better define market and product needs. These new technologies can be difficult to describe.

I often find myself acting as a conduit between clinical teams and technical teams, each of which is quite nervous about entering into a new type of conversation to try and understand concepts that fall outside of their primary training. That’s why these demonstrations and early-stage prototypes are so important: they translate concepts into experiences in a simulated environment and show clinical teams what is possible.

How is the project evolving?

We're having bigger conversations. We're building the project out, taking a human-centred and iterative approach to refining the code, and we’ve involved some rising stars in computer science to drive this forward. My greatest joy and delight has been getting to know the team. We've gone from a team of 10 up to a team of 44, led by our Engineering Lead at UCL Computer Science. As we look to the future, we anticipate uses for MotionInput in care homes, in schools and in hospitals.

Is computing by gesture hard to learn?

The first thing I'll say is that it’s magical to see what the technology can do. It’s designed to be intuitive and low latency, so gestures translate into real-time movement. However, when you try it out yourself it might feel a little bit unusual. Think back, if you can, to the first time you used a mouse: it’s likely you would have overshot where you wanted to move the cursor because you were used to using a keyboard. It's no different with mid-air hand gestures.

We started out with a default set of gestures that everyone could try out. For example, tapping your index finger and thumb together executes a left click. We’ve also developed gestures like an ‘idle state’ to avoid unintended gestures. Looking ahead, we know that people may want to programme their own gestures, and so we’ve incorporated the ability to customise gestures across all of the modules.
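To make the idea of a thumb-and-index tap, an idle state and customisable gestures more concrete, here is a minimal Python sketch. It is not MotionInput's code. The class `PinchDetector`, the function `run`, the threshold values and the action names are all hypothetical, and the fingertip positions are assumed to arrive from some hand-tracking model as normalised (x, y) coordinates.

```python
from dataclasses import dataclass, field
from math import hypot
from typing import Dict, Iterable, List, Optional, Tuple

Point = Tuple[float, float]  # assumed: normalised (x, y) image coordinates in 0..1


@dataclass
class PinchDetector:
    """Hypothetical detector: turns fingertip positions into gesture events."""

    press_threshold: float = 0.05    # fingertips closer than this count as a pinch
    release_threshold: float = 0.08  # fingertips must separate past this to re-arm
    idle: bool = False               # while idle, every gesture is ignored
    _pinched: bool = field(default=False, init=False)

    def update(self, thumb_tip: Point, index_tip: Point) -> Optional[str]:
        """Process one frame; return "pinch" when a new pinch starts, else None."""
        if self.idle:
            self._pinched = False
            return None
        distance = hypot(thumb_tip[0] - index_tip[0], thumb_tip[1] - index_tip[1])
        if not self._pinched and distance < self.press_threshold:
            self._pinched = True
            return "pinch"
        if self._pinched and distance > self.release_threshold:
            self._pinched = False
        return None


def run(frames: Iterable[Tuple[Point, Point]],
        detector: PinchDetector,
        bindings: Dict[str, str]) -> List[str]:
    """Map detected gestures to actions using a user-editable bindings table."""
    actions: List[str] = []
    for thumb_tip, index_tip in frames:
        gesture = detector.update(thumb_tip, index_tip)
        if gesture in bindings:
            actions.append(bindings[gesture])
    return actions


if __name__ == "__main__":
    frames = [
        ((0.20, 0.20), (0.50, 0.50)),  # fingers apart
        ((0.30, 0.30), (0.32, 0.31)),  # fingers touch: a pinch starts
        ((0.30, 0.30), (0.32, 0.31)),  # still touching: no repeat event
        ((0.20, 0.20), (0.50, 0.50)),  # fingers apart again
    ]
    # Rebinding "pinch" to another action is how a custom gesture map could look.
    print(run(frames, PinchDetector(), {"pinch": "left_click"}))
```

The two thresholds mean a held pinch produces one click rather than one click per frame, and setting `idle = True` suppresses events entirely, which is one simple way an idle state could prevent unintended input.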

Does the technology work for people of all races?

The technology shouldn’t be limited by ethnicity. Across our teams we've been quite lucky: we've got a good ethnic and gender balance, and so even the developers represent a good cross-section of the world. This means that, as gestures are developed, we encourage the team to trial them across members with different skin colours.

Who can get involved?

Our work is publicly downloadable and available to anyone who wants to use it. As I said, this is very much about making it available to everybody. It's an early-stage prototype. We're here to introduce a paradigm shift in Touchless Computing Interactions.