Unseduced by ChatGPT’s apparent abilities, Dr Matthew Shardlow of Manchester Metropolitan University’s Department of Computing and Mathematics explores the current limits of AI.

As conversational AI becomes ubiquitous in our daily technology interactions, you may begin to wonder if you are lost in a sci-fi inspired dream. The ability to describe our intent in natural language and see it realised as images, videos, audio and text-based responses is the stuff of wonder, imagination and, until recently, fiction.

For years, we were mis-sold on the poor-quality interactions of smart assistants. We got used to an AI world that forced us to work hard to reap any valuable interaction (“No Alexa, I didn’t mean to search for ‘art official in telly gents’”).

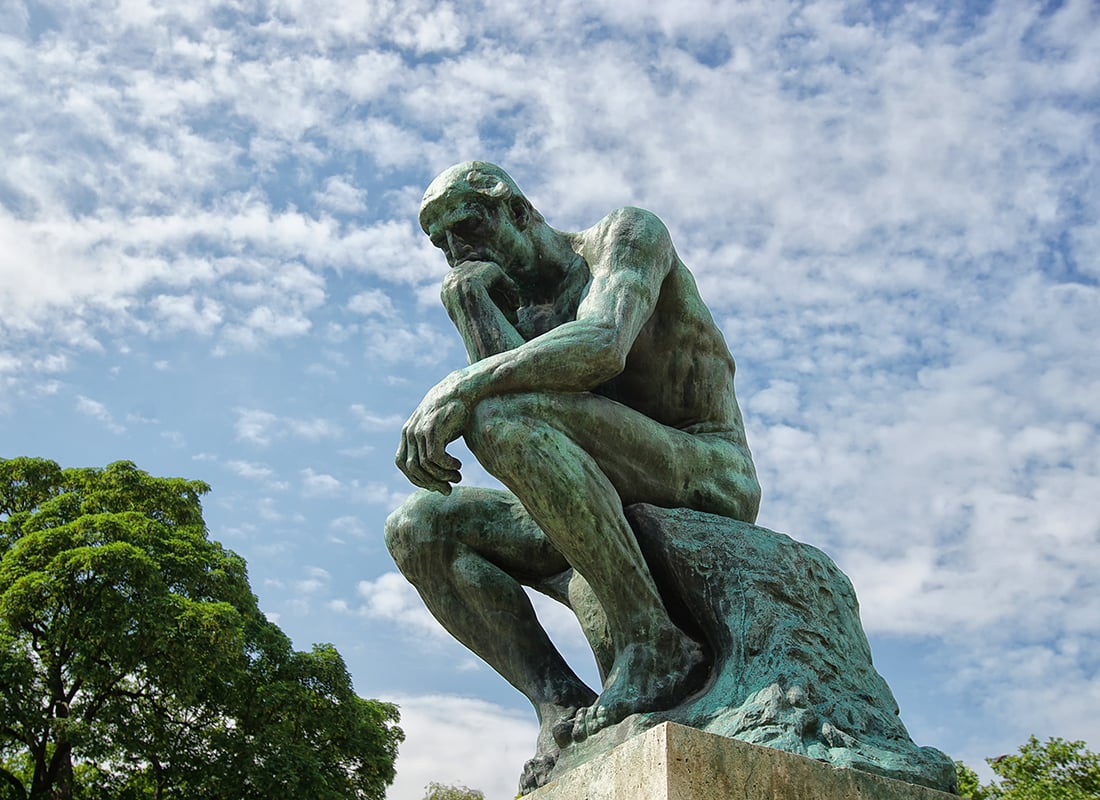

But in the new age of ‘Generative AI’, we can, in this very moment, interact with our machines as though they understand us. This raises the question: can these machines be considered conscious, or are their seemingly sentient interactions just a mirage of high processing power and complex mathematics?

The inner workings of generative AI

To better understand this question, we must lift the bonnet of the AI model and take a peek inside the engine. Modern AI systems rely almost exclusively on neural networks, which are bio-inspired mathematical models that learn to replicate the patterns they are exposed to.

Large Language Models (LLMs) rely on a particular variant of the neural network called the Transformer, introduced by researchers at Google in 2017. The Transformer is built from two types of neural network unit.

The first is a self-attention unit, which allows the model to learn which words contribute most to any given prediction and to prioritise the inputs that are most likely to lead to a correct prediction. For example, when responding to the prompt ‘what should I eat for dinner?’, the self-attention unit(s) will lead the model to give higher weight to words like ‘eat’ and ‘dinner’ than to ‘what’, ‘should’ or ‘for’. The second is a feed-forward network, made up of multiple layers of artificial ‘neurons’ that pass information from layer to layer.
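The weighting idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the relevance scores are hand-picked for the example (a real Transformer derives them from learned parameters), and `attention_weights` is a hypothetical helper name rather than part of any library.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(tokens, scores):
    """Pair each token with its normalised attention weight."""
    return dict(zip(tokens, softmax(scores)))

tokens = ["what", "should", "I", "eat", "for", "dinner"]
# Hand-picked relevance scores: an assumption made purely for illustration.
scores = [0.2, 0.1, 0.5, 2.0, 0.1, 2.2]

weights = attention_weights(tokens, scores)
# Print the tokens from most to least heavily weighted.
for token, weight in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{token:>7}: {weight:.2f}")
```

With these assumed scores, ‘dinner’ and ‘eat’ receive most of the weight, while ‘what’, ‘should’ and ‘for’ share very little of it.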

The power of the Transformer is increased by running the self-attention unit in parallel multiple times over the same input, and by stacking multiple layers of the entire Transformer block, with the output of one block becoming the input of the next. By doing this, researchers have produced the massive models that we hear about. For example, the largest version of GPT-3 runs 96 parallel self-attention units in each of its 96 layers.
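To make the scale and the stacking concrete, here is a small sketch. The `TransformerShape` class and `run_stack` function are hypothetical names of my own, and the ‘blocks’ are trivial stand-ins rather than real Transformer layers; the only figures taken from above are the 96 attention units and 96 layers.

```python
from dataclasses import dataclass

@dataclass
class TransformerShape:
    """Hypothetical container for the two sizes quoted in the text."""
    num_layers: int
    heads_per_layer: int

    def total_attention_heads(self):
        return self.num_layers * self.heads_per_layer

def run_stack(x, blocks):
    """Feed the output of each block into the next one, in order."""
    for block in blocks:
        x = block(x)
    return x

gpt3 = TransformerShape(num_layers=96, heads_per_layer=96)
print(gpt3.total_attention_heads())  # 96 * 96 = 9216

# Toy stand-in blocks (an assumption): each simply adds 1 to every value.
toy_blocks = [lambda values: [v + 1 for v in values] for _ in range(3)]
print(run_stack([0, 0], toy_blocks))  # [3, 3]
```

The point of `run_stack` is that information flows strictly forwards through the stack: nothing computed in a later block is ever passed back to an earlier one.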

The predictive power of transformer architecture

But how does this attention-driven model lead to the chatbot applications of recent infamy? Consider that any text can be broken down into a simple sequence of tokens (words, or fragments of words). Given any partial sequence, then, we can ask the question ‘what comes next?’. The secret is to use the incredible predictive power of the Transformer architecture to find the next word in a sentence. The Transformer is applied to some partial sequence, with the goal of scoring each possible word according to its likelihood of occurring next.
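The ‘what comes next?’ question can be illustrated with a deliberately crude stand-in for a language model. The sketch below simply counts which word follows which in a tiny made-up corpus; a real LLM replaces this counting with a Transformer that looks at the whole preceding sequence, so treat `next_word_probabilities` as a hypothetical illustration of the output, not of the method.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus, used only for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
tokens = corpus.split()

# Count which word follows each word in the corpus.
following = defaultdict(Counter)
for current, nxt in zip(tokens, tokens[1:]):
    following[current][nxt] += 1

def next_word_probabilities(word):
    """Score every candidate next word by how often it followed `word`."""
    counts = following.get(word, Counter())
    total = sum(counts.values())
    return {candidate: n / total for candidate, n in counts.items()}

probs = next_word_probabilities("the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

The result is a probability for each candidate word, which is the same kind of output that a Transformer-based model produces at every step, albeit over a vocabulary of many thousands of tokens.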

The big advantage of this type of learning is that you don’t need high-quality labelled data as with other machine learning tasks. Instead, you can use any text taken from available sources (such as the internet). When generating a response to a user’s prompt, the model responds word by word, predicting the next token in the sequence at each point. Each generation step takes the original prompt as well as the prior generated responses up to that point and passes the concatenation of both through the entire network.
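Both ideas in this paragraph can be sketched briefly: raw text supplies its own training labels, and generation is a loop that feeds the prompt plus everything generated so far back into the model. Everything named here (`training_pairs`, `TOY_MODEL`, `predict_next`, `generate`) is hypothetical, and the ‘model’ is a lookup table standing in for a trained network.

```python
def training_pairs(tokens):
    """Each prefix of raw text provides its own label: the token that follows."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

print(training_pairs(["the", "cat", "sat"]))
# [(['the'], 'cat'), (['the', 'cat'], 'sat')]

# Hypothetical stand-in for a trained model: maps the last token to the next.
TOY_MODEL = {"what": "should", "should": "I", "I": "eat",
             "eat": "tonight", "tonight": "<end>"}

def predict_next(sequence):
    """Receive the full sequence so far and return the predicted next token."""
    return TOY_MODEL.get(sequence[-1], "<end>")

def generate(prompt_tokens, max_new_tokens=10):
    generated = []
    for _ in range(max_new_tokens):
        # Each step sees the original prompt plus all tokens generated so far.
        context = prompt_tokens + generated
        token = predict_next(context)
        if token == "<end>":
            break
        generated.append(token)
    return generated

print(generate(["what", "should"]))  # ['I', 'eat', 'tonight']
```

Note that the whole context is rebuilt and passed to `predict_next` on every iteration, mirroring the way each generation step passes the concatenated prompt and response through the entire network.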

The process I have described so far generates likely responses to users’ prompts, given the type of data provided during training. However, language models are necessarily constrained by the patterns they have been exposed to during training. This leads to biases within the network. The old adage, ‘garbage in, garbage out’, has never been truer than when training an LLM.

For this reason, providers of LLMs add a further stage of training, usually described as ‘reinforcement learning’ (more fully, reinforcement learning from human feedback), in which humans play the role of the model and write sample responses to prompts. The model is then retrained using these responses to produce similar texts. This means that the final text returned by a language model leans on two sources of human-provided information: firstly, the initial data it was trained on, and secondly, the examples of how to respond to prompts. Ultimately, when generating any text, the model is drawing on probable sequences learnt during these two phases of development.

Consciousness and Integrated Information Theory

To decide if a system is capable of consciousness, we draw on Integrated Information Theory (IIT), which describes a set of axioms and postulates for this purpose. This theory has previously been applied in the context of neural networks, and it is easily demonstrable under IIT that a simple feed-forward network is not capable of possessing consciousness.

Particularly, IIT considers that to be conscious, a system must attain a sufficient level of measurable complexity, and a feed-forward network does not possess enough connectivity to be considered a complex system. Whilst each layer is connected to the next, the connections are unidirectional, so there is no capacity for the system to attain a similar level of complexity to a biological conscious system (such as the brain).
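The ‘unidirectional’ point can be made concrete with a small connectivity check. To be clear about its limits: this sketch does not compute IIT’s measure of integrated information, it only tests whether any unit in a network can influence itself via a feedback loop. The two example networks and the `has_feedback` helper are hypothetical.

```python
def has_feedback(connections):
    """Return True if any unit can reach itself by following connections."""
    def reachable_from(start):
        seen = set()
        stack = list(connections.get(start, []))
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(connections.get(node, []))
        return seen
    return any(unit in reachable_from(unit) for unit in connections)

# A three-layer feed-forward network: each layer only feeds the next.
feed_forward = {"in": ["hidden"], "hidden": ["out"], "out": []}
# A hypothetical recurrent network: the last unit feeds back into the first.
recurrent = {"a": ["b"], "b": ["c"], "c": ["a"]}

print(has_feedback(feed_forward))  # False
print(has_feedback(recurrent))     # True
```

In the feed-forward case no unit ever receives information that depends on its own earlier output, which is the structural property the argument above relies on.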

We can apply IIT to a Transformer-based LLM using the following argument. Firstly, consider that the Transformer architecture is made up of feed-forward networks and self-attention. Neither of these units could be considered sufficiently complex to warrant consciousness under IIT. To be considered, there would need to be some external interconnectivity between units, causing an increase in complexity.

However, the connections between the attention units are linear, as is the connection between each layer of the architecture resulting from the output of the feed-forward network. There is no interconnectivity. The increase in complexity from chaining layers is minimal, and we cannot consider a single Transformer block to be conscious. We can make a similar argument that consciousness cannot arise during the language generation stage, as there is a similar linearity in the connections between each step. Therefore, according to IIT, any LLM following the Transformer architecture cannot be considered sufficiently complex to possess consciousness.

This argument is backed up by empirical observation of the capabilities of available LLM chatbots. Try asking ChatGPT to write poetry, provide factual information or rewrite the tone of a text, and it will perform well. These are tasks based on information sources it has been trained on, and ones that it has likely been tuned to perform through reinforcement learning. However, if you ask it to play chess, describe grief, write a (new) palindrome or verify a fact, it will quickly fail.

This is not just an artefact of ChatGPT’s training, but results from a fundamental limit on the capacity of LLMs: there is no evidence that learning to predict the next word in a sequence can give rise to the capacity for logic, perception, planning or veracity, all of which are fundamental facets of the human experience and necessary outcomes of model consciousness. There are certainly breakthroughs to come in each of these areas, but the current abilities of LLMs are no more likely to result from consciousness than those of 1960s chatbots like ELIZA (the rule-driven program that imitated a Rogerian psychotherapist).

Is consciousness on the cards?

Can a language model be conscious? No, not today. Language models are simply not derived from a complex enough architecture. What’s more, they fail at simple tasks that require perception and reasoning. They are deeply sophisticated pieces of technology running on incredibly powerful hardware, with a plethora of potential societal benefits and ills. To make those benefits outweigh the potential harms, we must approach this new technology with a correct understanding of its fundamental principles, capacities and limitations.