Big data has been an ongoing subject for quite few years. Big data is frequently used as a problem statement rather than as a technology itself. Big data refers to the methods and tactics applied to manage large volumes of data, as well as performing analytics on this data, which in turn needs to bring value to those who need its information. Examples include data from: retail, banking, insurance, transport, which also includes supply chain activities.

Over the years various methodologies and techniques have been applied to dig deep into this vast information body and in this article we’ll discuss two specific architectural directions and how they meet the big data needs.

The data warehouse route:

In this route, the overall big data architecture is built on one or more data-warehouses. A data-warehouse is a storage area of data where information is captured in a structured format so that analysis can be performed to produce meaningful information. A business intelligence application (example: QlikeView/QlikSense, Tableau, SAP business objects, etc.) can be used to produce analytical reports on the data stored on the data warehouses. Facts/ dimension tables are organised into star, snowflake or other well know database schemas to organise the data in order to meet business needs.

To take this step further, we may have a series of data marts, which are lower level data warehouses and hold subject specific data that might be of interest to specific business needs (e.g.: for a specific department, or line of business). The data from the above data warehouse would be extracted, transformed and loaded into these data-mart buckets. We could also introduce data vaults, which may use the satellite-hub-link data model to store historical information that the business might need as part of its business prediction. A data lake is vast body of unstructured, semi structured and structured data that the business may need to perform predictive analysis. Database technologies, like Apache Cassandra, and Mongol, support the use of the Nosql querying language to perform database queries.

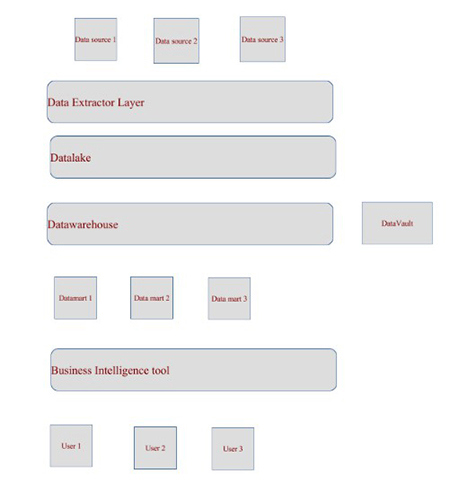

The diagram below is an example of a layer-by-layer approach to help reap the benefits of the stored data. The top layer consists of upstream systems and there would be various data source endpoints here. The data extractor layer could be used as a middleware application (e.g.: middleware application – enterprise service bus) to performs data extraction, transformation and loading into the data lake layer. Real time information and batch processed information could be gathered here. Data scientists could perform data analysis on this layer to get deep insights into this vast ocean of information.

Information from a data lake would undergo ETL (extract, transform, load) jobs using technologies such as Talent or informatica and dumped into the data warehouse and data vault. Since the data warehouse and the data vault serve different purposes the data could be sitting in both these buckets separately. Further ETL or any other jobs could be performed to move data from the data warehouse to the data marts. Finally, the users are able to use the business intelligence application to perform predictive analysis on the data marts and data vault if need be.

The Hadoop route:

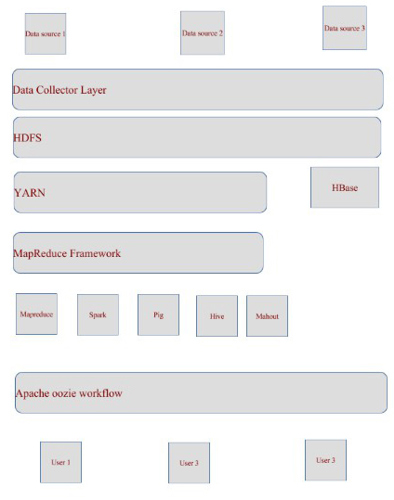

Hadoop is one of the most widely used big data infrastructures used and really helps reap the benefit of performing analytics on large volumes of information. The core elements of hadoop infrastructure include: HDFS (the hadoop file system, this is a write one, read many times file system), YARN (yet another resource negotiator), HBase (the Nosql database) and the hadoop core libraries. One of the advantages of using Hadoop as an infrastructure is that it is a technology agnostic analytical platform and has the ability to support multiple technologies to perform data analytics. MapReduce is an application that is widely use to perform analysis on the data stored in Hadoop. The following is a high-level architecture of the hadoop infrastructure based on hadoop 2.0 version.

One of the main differences between the data warehouse route and the hadoop route is that the key areas that would provide the type of information captured by data lake, data warehouse, data mart and data vault layers are performed within the hadoop (HBase and HDFS layers) as this where data is captured in either tabular format or unstructured format. Data ingestion happens into the HDFS from the data source endpoint via data collectors such as Flume, Sqoop, HDFS commands, and Kafka, among other methods that are beyond the scope of this article.

All users that need to perform the analysis on the data would use technologies like: MapReduce, Spark, Storm, Pig, Hive (data warehouse tool), Mahout (machine learning) among others. All these technologies would send the jobs to YARN which will then allocate containers with appropriate RAM and disk space to execute these jobs using compilers (e.g.: JVM). The containers are allocated to the different nodes within a node cluster. Apache Oozie would be used as a workflow system to coordinate these technologies in sequence, as per business needs, before they are sent to YARN for processing. This is a high-level view of the Hadoop infrastructure, but there are various other elements that would perform different functions that are specific to different use-cases.

Big data, as a domain, has the potential of plugging into other domains such as the internet of things, artificial intelligence, RFID technologies among others. The IoT and RFID can be plugged into the data extractor (ESB for the data warehouse route) and data collector (Flume, Sqoop, or other methods for the Hadoop route) to feed and consume real-time and batch jobs. Through the data extractor or data collector layer, the data is consumed or fed from/to the data warehouse or HDFS layer respectively. The artificial intelligence layer can be plugged into the analytics layers of both architectures to read and interpret information stored and this can be used as training data during the machine learning processes. The architecture of how this can achieved is beyond the scope of this article.

So, in-conclusion, the big data domain has been a growing trend and is opening doors to other domains as well. There are various architectures applied out there and this article has introduced two different approaches that can be applied in practise.