With the increase in popularity and usability of AI, Martin Wheatman PhD MBCS explores algorithms, how they work and how Alan Turing’s research can help our understanding.

For 75 years, algorithms have been contained within computers; understanding how they work requires the ability to read source code. The algorithm may have reached public consciousness; but how it does what it does, typically, remains a mystery. By exploiting the Turing Completeness of speech, it is in fact possible to shine a light into this hidden world.

Making software readable began with Kathleen Booth’s idea for an assembly language: 3- or 4-letter codes – mnemonics - representing each binary machine instruction. COBOL, the first compiled ‘high-level’ language, had readability at its heart with a natural language syntax. However, source code evolution has resulted in greater brevity, and readability has taken a back seat. With the advent of graphical user interfaces, the already obscure algorithm disappears completely.

Despite our growing reliance on algorithms, particularly in social media and AI, there remains hope. Ordinary Language Philosophy (OLP) asserts that speech contains information in the words used, not in some underlying logic. If so, algorithms could be written in plain language and would be readable. The key is how speech, itself, functions: how it is Turing Complete.

Alan Turing defined the essence of a computer in his 1937 paper On Computable Numbers 1. He noted that instructions have the same – universal - form as data, and so can be stored in memory. Since 1948 these instructions have been called a stored program 2. It is the ability of one such Turing Machine to simulate another, for a set of instructions to work as a set of instructions, is known as being Turing Complete.

Speech can certainly describe a process, as in Euclid’s Algorithm: keep taking the lesser of two numbers from the greater, and you will reach a number which is the common divisor of both. So why aren’t we computing already with speech systems? The problem is that a chatbot, such as Eliza or Alexa - the de facto standards for speech systems - cannot be a Turing Machine because they distinguish between the data and the way it is processed: they lack Turing’s universality. Defining new words or phrases would require more source code. To be Turing Complete, they would need to process speech using speech.

For you

Be part of something bigger, join BCS, The Chartered Institute for IT.

Speech, itself, has this universal property: it is both data and action; both are encoded in what is being, or has been, said. Ogden and Richards noted, way back in 1923, that speech’s building blocks are entire utterances: ‘words mean nothing in themselves …’3. In the tutoring of maths, for example, times tables are learnt by rote: 2 times 2 is 4. This represents an atomic, symbolic, relationship between what is said and its response: there is no need for a logical proof. This fundamental building block can be combined to form a functioning (a Turing Complete) system, by virtue of the following properties:

- Universality – both action and data are grounded in utterances: meaning is not found in individual words, but in what (further) utterances are implicated 5. Compare: ‘I have an apple’, with, ‘I have a dream’;

- Conditional processing – provided by the idea of ‘felicity’ 4. In practice, this is performed whenever we prefix utterances with: ‘if so, …’, or, ‘if not, …’.

- Processing loops – are created by recursion: realised, for example, in the phases the ‘first of these’ and ‘the rest of these’, when processing a list. One is processed and the rest is reduced by one, until ‘the rest’ becomes a list of one.

All these properties are demonstrated by the open source tool Enguage. Winner of the BCS SGAI Machine Intelligence Competition in 2016, it simply describes speech in further speech, making the algorithm readable and transparent. It can simulate numeric algorithms, such as recursive factorials 6 to FizzBuzz (with examples of recursion in 8):

On "what is the fizzbuzz of N":

is N divisible by 5 and 3;

if so, reply "fizzbuzz";

is N divisible by 5;

if so, reply "buzz";

is N divisible by 3;

if so, reply "fizz";

reply "N".

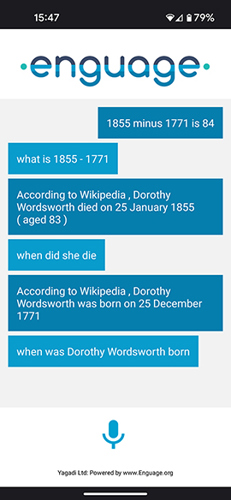

However, the real power of speech processing is when processing text. Enguage deterministically accesses values in Wikipedia, thus:

On "when was PHRASE-PERSON born":

get the PERSON page from wikipedia;

if not, reply "sorry, I don't know PERSON";

find the table header containing born;

if not, say so;

retrieve the date from the next table cell;

if not, say so;

reply "According to Wikipedia, PERSON was born on ...".

This can, in turn, be combined to support further speech, such as in 7:

On "how old is PHRASE-PERSON":

when did PERSON die;

if not, when was PERSON born.

A decade after its initial development, the technical abilities of Enguage are becoming more apparent, and the evidence to demonstrate this is growing. Its test suite contains over 550 test cases; and the task of fully defining a language, in natural language, is underway as a machine-accessible dictionary. Disambiguation is fully described in 9. Learning language can be as simple as saying, e.g. to the phrase ‘thank you’, reply ‘you’re welcome’.

By writing software in natural language, we plainly expose our algorithms. However, organisations are not obliged to write such code; we may only point the way to transparency. Indeed, it may be more efficient to keep mathematical algorithms, such as neural nets or chess programs, encoded in machine code. However, this technique might prove useful where transparency is paramount; perhaps in replacing non-deterministic use-cases, to produce a more intelligible and trustworthy interaction?

Further reading:

1 “On Computable Numbers”, by Alan Turing, in “The Annotated Turing” by Charles Petzold (2008)

2 The first stored program, in the ‘Manchester Baby’, calculated Euclid’s Algorithm, 21st June, 1948

3 “The Meaning of Meaning”, by C. K. Ogden and I. A. Richards, pp 9-10 (1923)

4 “How To Do Things With Words”, by J. L. Austin (1962)

5 “Studies in the Way of Words”, by Paul Grice (1989)

6 “Programming without Program”, in IT Now (March 2018)

7 See: Rosetta Code - Category:Enguage

8 See: Bitbucket.org

9 See: “A Pragmatic Approach to Disambiguation” DOI: 10.1007/978-3-319-42102-5_16