It used to be so easy to cool a data centre. Your power-hungry mainframe came with its own cooling system, so all that you had to worry about were the operators and some ancillary equipment such as terminals and tape drives. A nice, uniform temperature throughout - that's what you needed.

Things didn't get much worse when distributed computing began to make its mark. You had a collection of desk-side or desktop workstations that you probably put in a cabinet. All that was necessary was to introduce some air at the bottom and close the doors; systems were designed to be comfortable if their users were, so a nice, uniform temperature was still the order of the day.

With the advent of high-density servers, blades and the like, things began to change. These systems are people-compatible only at their fronts; their outlet temperatures are often uncomfortable for users. The whole philosophy of data centre cooling has changed, and uniform temperatures in the data centre are a thing of the past. Even if you wished to achieve uniform temperatures, it would now be nigh on impossible. Instead, you must manage the cooling of the data centre to avoid hot spots and potentially damaging overheating of equipment.

To achieve this, one must abandon the previous tenets of air conditioning and discard the old concepts of mixing air, favouring instead a highly disciplined segregation of your cooling air from that which has already been heated.

There are many benefits to managing your cooling resources this way. You can eliminate hot spots in your data centre, maintain air return temperatures to your cooling equipment for maximum efficiency of your cooling plant, and potentially increase your capacity. This, however, all comes at a price.

First steps

There are specific and inexpensive actions you can take to use your cooling air more effectively. These disciplines are well known but, surprisingly, are often ignored or have fallen by the wayside - which makes them worthy of mention.

Rack doors

Using doors on a rack only impedes the flow of air through servers. For instance, a 4 kW server needs approximately 17 m³ of cooling air per minute, so a door in front of the server is likely to cause problems.
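The airflow figure can be checked with a simple heat balance: the heat carried away equals air density times specific heat times volume flow times temperature rise. The sketch below is illustrative only; the air properties and the 12 K rise across the server are assumptions, not figures from any particular server specification, and the function name is hypothetical.

```python
# Assumed properties of air near sea level at about 20 °C (illustrative values).
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0


def required_airflow_m3_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Volume of air per minute needed to remove heat_load_w with a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Heat balance: P = rho * cp * Q * dT, solved for the volume flow Q in m³/s.
    flow_m3_per_s = heat_load_w / (
        AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k
    )
    return flow_m3_per_s * 60.0


if __name__ == "__main__":
    # A 4 kW server with an assumed 12 K rise between inlet and outlet.
    print(round(required_airflow_m3_per_min(4000.0, 12.0), 1))  # 16.6
```

With these assumptions the result is close to 17 m³ per minute. A smaller temperature rise across the server would call for proportionally more air.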

Hot and cold aisles

All too common is overheated equipment cooled by air vented from other servers, since hot and cold aisles were not kept separate as the data centre grew. In the field, it has been known for spent air from one rack to pass through two others on its return to the air conditioning equipment. This air was well in excess of recommended inlet temperatures for the equipment even before its transit through the final bank of servers.
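A rough worst-case estimate shows why such air is far too warm by the time it reaches the last rack. The sketch below assumes the spent air passes from rack to rack without mixing with any cooler room air, and that each rack adds a fixed temperature rise; the 20 °C supply and 12 K rise are hypothetical figures chosen for illustration.

```python
def cascaded_inlet_temps_c(supply_temp_c: float, rises_k: list[float]) -> list[float]:
    """Inlet temperature seen by each rack when air passes through them in series."""
    inlets = []
    temp_c = supply_temp_c
    for rise_k in rises_k:
        inlets.append(temp_c)  # this rack breathes whatever the previous one exhausted
        temp_c += rise_k       # and adds its own heat before passing the air on
    return inlets


if __name__ == "__main__":
    # Three racks in series, each assumed to warm the air by 12 K.
    print(cascaded_inlet_temps_c(20.0, [12.0, 12.0, 12.0]))  # [20.0, 32.0, 44.0]
```

In a real room some mixing with cooler air would lower these figures, but the trend is the same: each pass through a server makes the air less useful to the next one.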

Preventing bypass

When equipment is installed or replaced, the placement of blanking panels in the unused parts of a rack is often neglected. These holes in the rack allow unused cooling air to transit straight to the hot aisle without performing any useful work. Even worse, they allow the ingress of spent air into the cold aisle, raising the temperature there.

Of similar concern are the posts in the rack themselves. These should be solid, or have any apertures covered, to prevent movement of air through the rack along the sides of the servers.

Cable cut-outs in the floor should be sealed to prevent air entering the cabinet from below, even when a cabinet requires cooling air to be delivered there. When you have a cabinet requiring cooling from below, this should be delivered in a managed way, not ad hoc.

Placement of ventilated tiles

One hangover from the days of yore, when water-cooled mainframes were king, is floor tiles that were placed for the comfort of operators: along walkways, near the areas where operators worked, and so on. In today's data centre, this is simply an unaffordable and wasteful luxury. Tiles should be placed where they can deliver cold air to the intakes of equipment or prevent the entry of hot air, and used for very little else. Especially wasteful is the placement of a ventilated tile in a hot aisle.

Building

The first steps outlined above are building blocks for a good data centre. They are also the simplest to implement, even in a data centre with a legacy design. In practice, these improvements are assessed using computational fluid dynamics (CFD) models, since changes in airflow are otherwise difficult to visualise. Even a few years ago the cost of such modelling would have been prohibitive. With data centre-specific CFD packages, coupled with today's personal computers, such modelling is practical whether you are designing a new data centre or planning changes to an existing one.

Whilst the benefits of applying these disciplines are tangible, you can achieve much larger improvements by addressing the airflow design of your data centre.

Efficient hot air evacuation

Once the air has been heated by a server, it has no further purpose in a data centre and should be contained to prevent mixing and then removed as quickly as possible. The most effective way to achieve this is with a physical barrier: a hot-air return plenum.

The simplest logical arrangement is to situate vents to the plenum above the hot aisles to draw the air directly into the plenum after it has been used. This common arrangement can also be the basis for much more effective use of the available cooling air.

Of course, adding a hot-air return plenum to a running data centre is neither trivial nor inexpensive, and has the potential to cause major disruption. Further, the enclosed space will need fire suppression and monitoring equipment, thus adding to the cost.

Planning rack placement

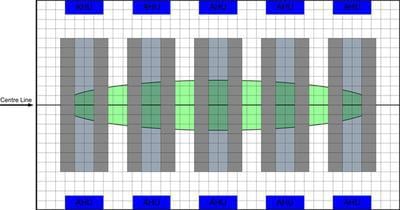

As server cooling requirements grow, it becomes increasingly necessary to place racks where the cooling air is available. In a traditional data centre design, a rectangular room with computer room air conditioners (CRACs) or air handling units (AHUs) placed along the long edges so that they oppose each other, the equipment with the greatest cooling requirement would probably be placed near the mid-point of the room, where the ability to deliver air is highest.

Why probably? There are many factors that affect the delivery of air from beneath the floor including the physical dimensions of the room, under-floor obstructions and the throw of the air from the air conditioning equipment. Again, computer modelling of the relevant airflows is the solution to visualising and understanding the behaviour of a given data centre - but a solution that often provides counter-intuitive results.

Figure 1. A hypothetical traditional data centre showing the region where the under-floor pressure is likely to be highest

Positive containment of used air

Even with careful placement of the equipment and evacuation of the hot air via a return plenum, hot air can still contaminate a cold aisle by entering from the top or ends of a row of racks. Therefore, it still may not be possible to adequately cool modern high-density servers due to ineffective use of the cooling mass. You can counter this by marshalling the cooling and spent air to further restrict mixing. Conceptually, you can achieve this by placing partitions from the top of a row of racks to the ceiling and enclosing the ends of the aisles. This would normally be done for either the hot or the cold aisles, not both; modelling shows that enclosing a hot aisle is marginally more efficient.

For rooms where there is no realistic chance of a hot-air return plenum being available to remove the air from the hot aisle, you can use alternative techniques such as cold aisle containment, or partial hot aisle enclosure to conduct the hot air to the ends of rows of racks.

Aisle enclosure is not, however, without its drawbacks. Creating such an enclosed space may, depending on exactly where it is, constitute a fire zone and require independent monitoring and fire suppression. Further, the hot aisle has now become a very unpleasant place to work, with uncomfortable temperatures. This is, of course, of no consequence to the IT equipment, but could be a significant people management issue.

The next step: avoiding the drawbacks

The hot-aisle enclosure is a very effective method of controlling your spent-air return, but has some significant problems. However, by extending the concept, it is possible to make even more effective use of your cooling air and avoid the regulatory and people issues that the enclosure creates.

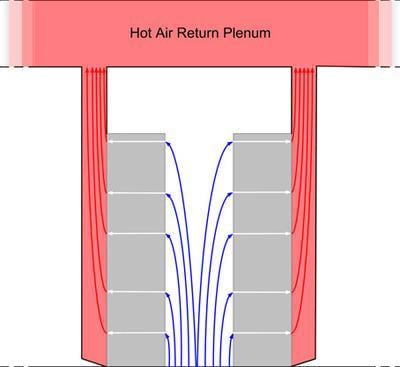

Should the width of the hot aisle allow, the key to this is to reduce the size of the enclosure such that it serves exactly one rack - effectively adding a chimney to connect the rear of a rack directly to the hot-air return plenum. The spent air is thus completely contained without creating zones.

Figure 2. A conceptual view of chimneys attached to the rear of racks

This is not quite the panacea it would seem to be. Ignoring the capital cost of the structure itself, the temperature of the air in the plenum in a high-density data centre may well now be too high for the cooling infrastructure to effectively handle, even though the cooling plant may not be near capacity.

This situation can occur precisely because the hot and cold air masses do not mix. It is therefore time to break one of our newfound rules and allow some cold air to mix with the hot air mass through a strategically placed vent. (It really is possible to have too much of a good thing.)
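The effect of such a vent can be estimated with a simple mixing calculation. The sketch below assumes the hot and cold streams have the same density and specific heat, so that volume flows can be weighted directly; the flows and temperatures in the example are hypothetical, and the function names are illustrative only.

```python
def mixed_return_temp_c(hot_flow: float, hot_temp_c: float,
                        cold_flow: float, cold_temp_c: float) -> float:
    """Temperature of the return air after the two streams mix (flow-weighted mean)."""
    total_flow = hot_flow + cold_flow
    if total_flow <= 0:
        raise ValueError("total flow must be positive")
    return (hot_flow * hot_temp_c + cold_flow * cold_temp_c) / total_flow


def bypass_flow_needed(hot_flow: float, hot_temp_c: float,
                       cold_temp_c: float, target_temp_c: float) -> float:
    """Cold-air flow to bleed into the plenum so the mixture reaches target_temp_c."""
    if not cold_temp_c < target_temp_c <= hot_temp_c:
        raise ValueError("target must lie above the cold and at or below the hot temperature")
    # Rearranged from the flow-weighted mean used in mixed_return_temp_c.
    return hot_flow * (hot_temp_c - target_temp_c) / (target_temp_c - cold_temp_c)


if __name__ == "__main__":
    # 100 m³/min of 45 °C spent air, 18 °C supply air, 36 °C acceptable return.
    vent_flow = bypass_flow_needed(100.0, 45.0, 18.0, 36.0)
    print(vent_flow)                                           # 50.0
    print(mixed_return_temp_c(100.0, 45.0, vent_flow, 18.0))   # 36.0
```

The example also shows the cost: every cubic metre of cold air sent through the vent is cooling capacity that never reaches a server, so the vent should be sized no larger than the cooling plant requires.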

The conservative new world

In a world with soaring energy costs, carbon taxes, and growing awareness of ecological impacts, it is irresponsible not to manage effectively the limited resources at our disposal; cooling air is one of these resources. Conservative use of cooling air to reliably maintain the correct operating environment for computing infrastructure can result in significant energy savings and improve the mean time between failures (MTBF) of servers. Combining measures such as those described here with free air, wet-side economisers and other cooling system improvements can make a substantial impact.

It is not enough, however, to implement these measures blindly. Managing airflows is notoriously difficult, since air has a tendency to flow almost everywhere except where you wish it. Successfully managing your data centre and its airflows can enable your data centre to be a key part of your competitive advantage instead of constraining your business performance. Modelling and simulating changes, coupled with experience, allow you to develop a more efficient facility to support tomorrow's business challenges. Finally, carefully adhering to thermodynamically sound cooling management techniques often provides a cost-effective route to data centre capacity increase.

Dave Woodman is senior consulting engineer at Intel® Solution Services.